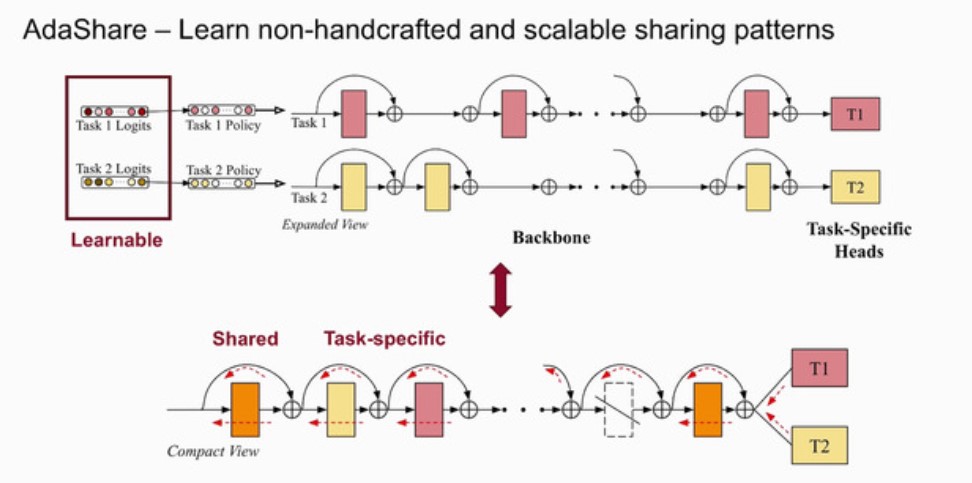

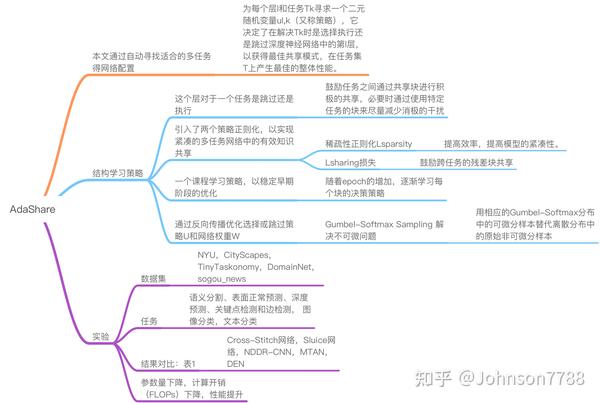

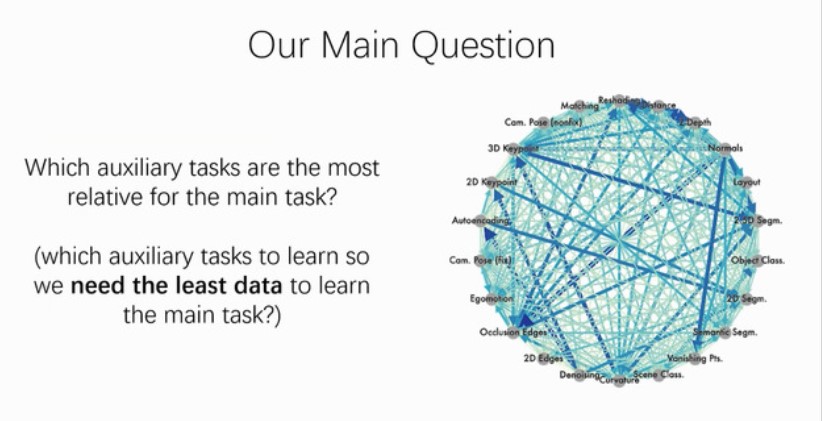

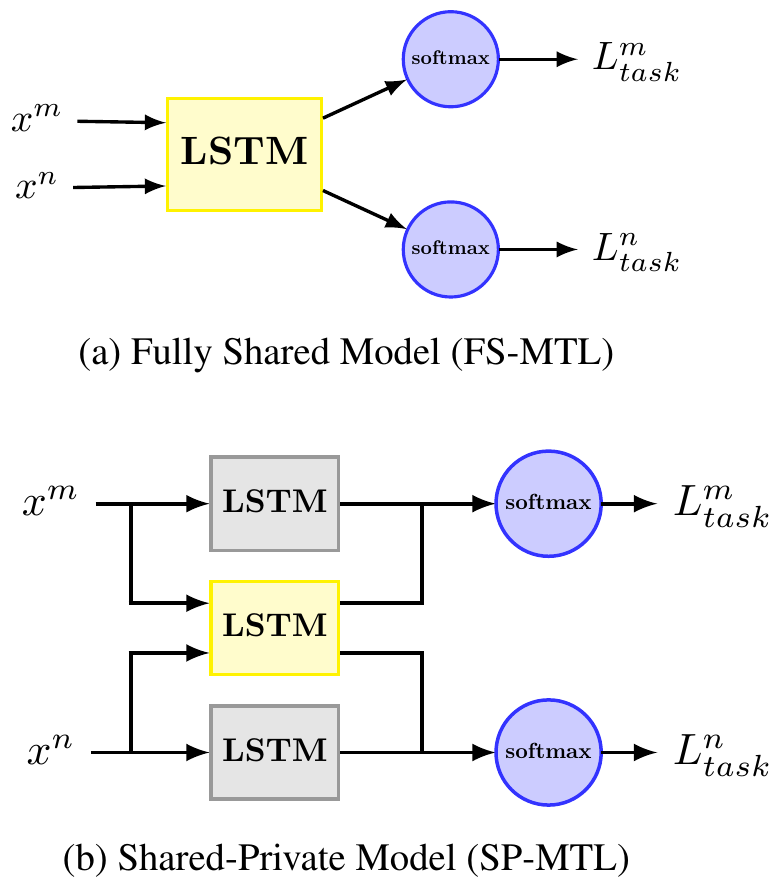

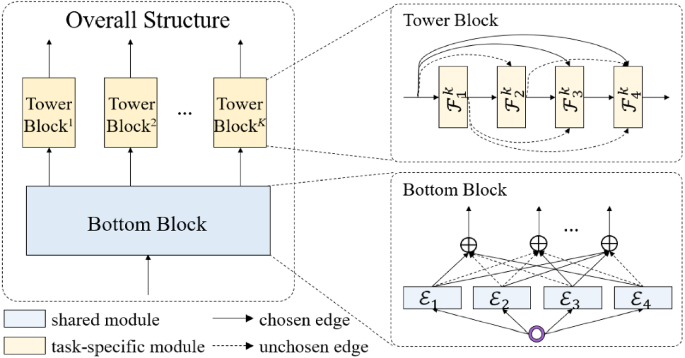

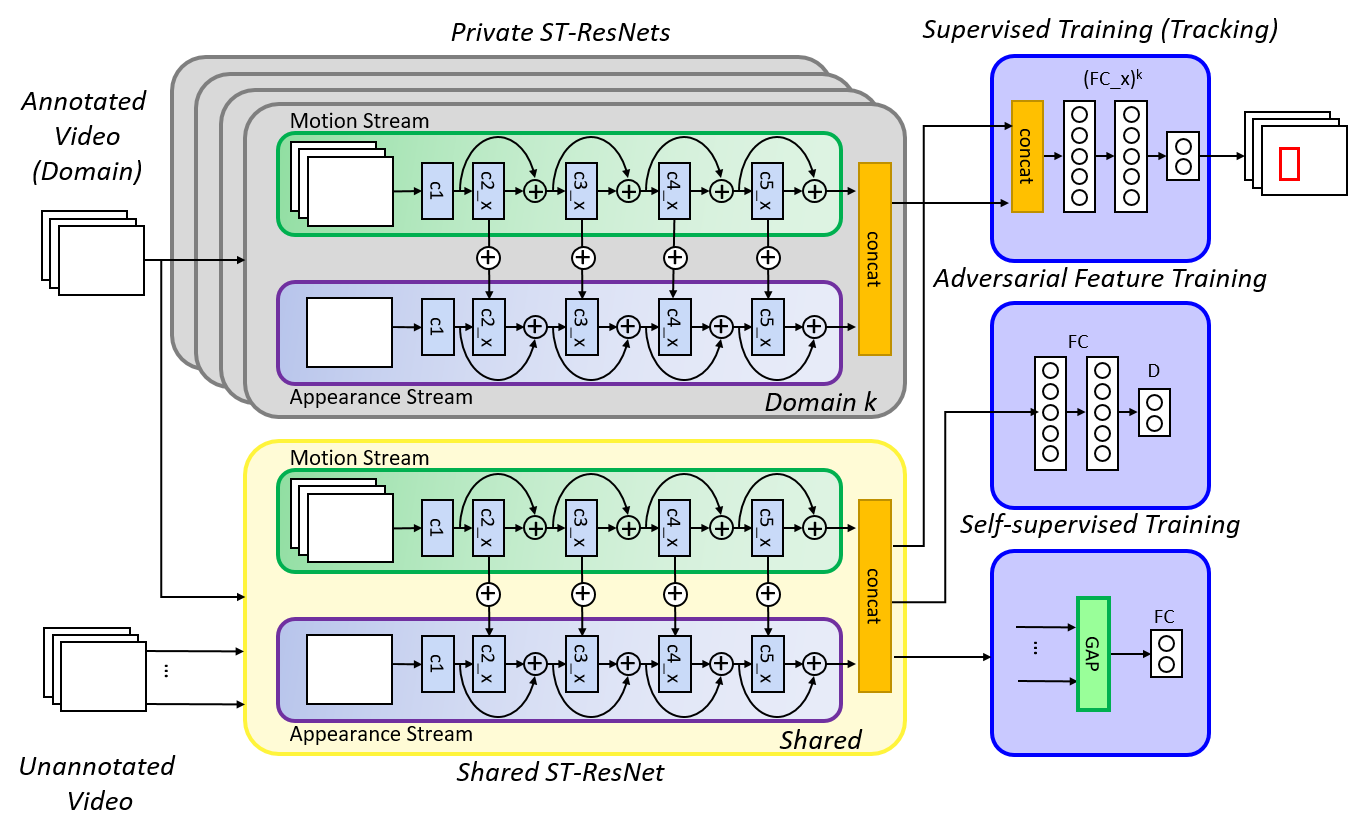

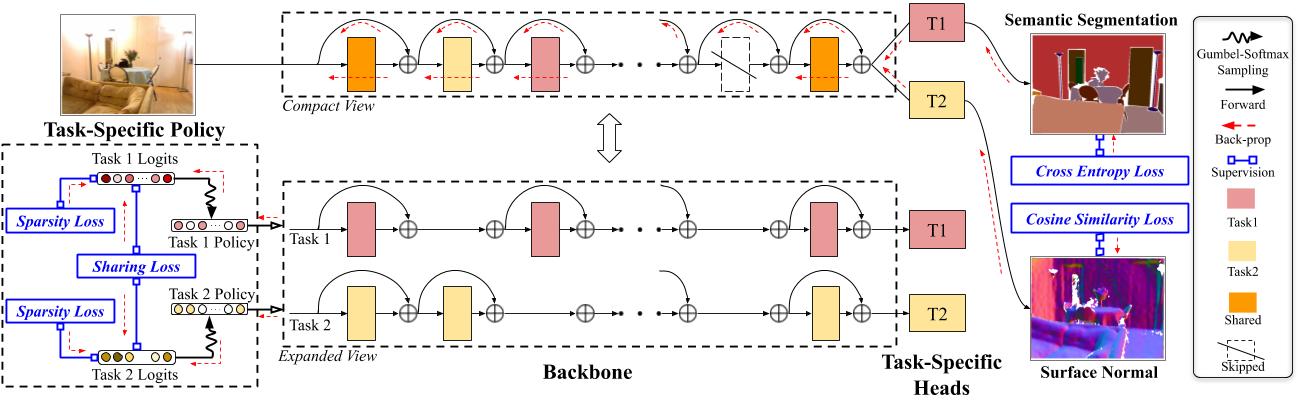

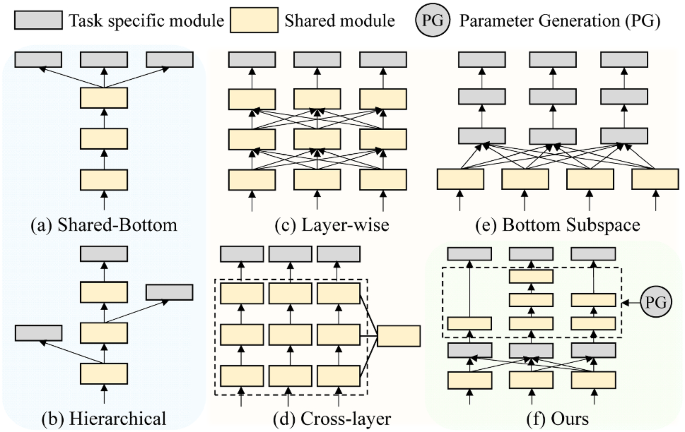

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning (NeurIPS)

Multi-Task Learning: 442 papers with code • 4 benchmarks • 39 datasets. Multi-task learning aims to learn multiple different tasks simultaneously while maximizing performance on one or all of the tasks. (Image credit: Cross-stitch Networks for Multi-task Learning)

AdaShare is a novel and differentiable approach for efficient multi-task learning that learns the feature sharing pattern to achieve the best recognition accuracy while restricting the memory footprint as much as possible. Our main idea is to learn the sharing pattern through a task-specific policy that selectively chooses which layers to execute for a given task in the multi-task network.
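To make the idea of a task-specific policy concrete, here is a minimal sketch of how one shared stack of blocks could be executed differently per task. It rests on several assumptions that are not in the text above: the blocks are treated as residual blocks (so a skipped block is simply the identity), the toy lambdas stand in for real layers, and the binary policies are hard-coded rather than learned. The names `run_with_policy`, `shared_blocks`, and `policies` are hypothetical.

```python
from typing import Callable, Dict, List

Block = Callable[[float], float]


def run_with_policy(blocks: List[Block], policy: List[int], x: float) -> float:
    """Execute only the blocks the policy selects; skipped blocks act as identity."""
    if len(blocks) != len(policy):
        raise ValueError("policy needs exactly one decision per block")
    for block, keep in zip(blocks, policy):
        if keep:
            # Assumed residual form: output = input + F(input).
            x = x + block(x)
        # keep == 0: the block is skipped and x passes through unchanged.
    return x


# Toy shared backbone (hypothetical stand-ins for real network layers).
shared_blocks: List[Block] = [
    lambda x: 1.0,
    lambda x: 2.0 * x,
    lambda x: -0.5 * x,
]

# Hypothetical policies: 1 = execute the block for this task, 0 = skip it.
# In a real system these decisions would be learned, not written by hand.
policies: Dict[str, List[int]] = {
    "segmentation": [1, 1, 0],
    "depth": [1, 0, 1],
}

outputs = {task: run_with_policy(shared_blocks, p, 1.0) for task, p in policies.items()}

# Blocks executed by every task are the ones actually shared across tasks.
shared = [i for i in range(len(shared_blocks)) if all(p[i] for p in policies.values())]
```

In this toy setup only the first block is used by both tasks, so it is the only one whose parameters are shared; the other two blocks are effectively task-specific even though all three live in a single network.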

Multi Task Learning And Beyond Past Present And Future Programmer Sought

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning

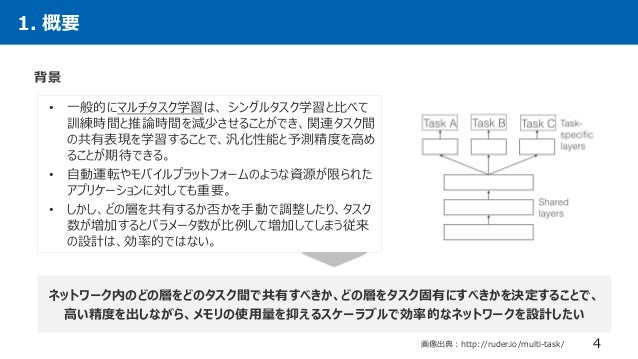

This time, I would like to review AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning, a paper presented at the NIPS poster session. Please refer to the link for the paper.

Background and Introduction: First of all, what is multi-task learning?

Title: AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. Authors: Ximeng Sun, Rameswar Panda, Rogerio Feris, Kate Saenko (this version, v2).

Abstract: Multi-task learning is an open and challenging problem in computer vision. The typical way of conducting multi-task learning with deep neural networks ...

Rpand002 Github Io Data Neurips Pdf

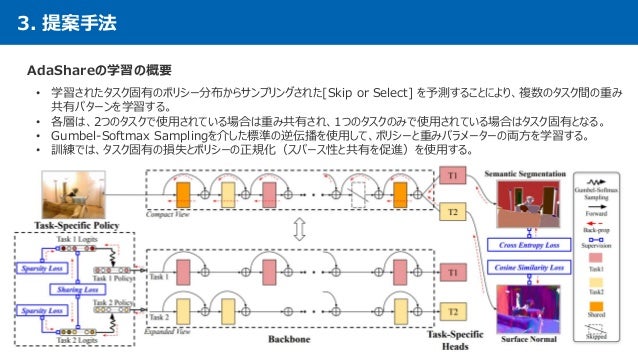

AdaShare: a new approach for multi-task learning. It proposes a new approach for efficient multi-task learning in computer vision. (DL Reading Group: AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning, from Deep Learning JP.)

Ximeng Sun, Rameswar Panda, Rogerio Feris, Kate Saenko. 1Boston University, 2MIT-IBM Watson AI Lab, IBM Research. {sunxm, saenko}@bu.edu, {rpanda@, rsferis@us.}ibm.com. Poster Session 4.

Efficient Multitask Deep Learning. Principal Investigator: Klaus Obermayer. Team members: Heiner Spieß (doctoral researcher). Developing deep-learning methods; Research Unit 3, SCIoI Project 15. Deep learning excels in constructing hierarchical representation from raw data for robustly solving machine learning tasks, provided that data is sufficient. It is a common ...

Learned Weight Sharing For Deep Multi Task Learning By Natural Evolution Strategy And Stochastic Gradient Descent Deepai

How To Do Multi Task Learning Intelligently

Network Clustering for Multi-task Learning, by Dehong Gao, et al. The Multi-Task Learning (MTL) technique has been widely studied by worldwide researchers. The majority of current MTL studies adopt the hard parameter sharing structure, where hard layers tend to learn general representations over all tasks and ...

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning, issue #1517, opened by icoxfog417. Labels: CNN, ComputerVision.

Multi-task Learning and Beyond: Past, Present and Future, by 刘诗昆. Recent research on Multi-task Learning (MTL) has produced many breakthroughs and explored many interesting new directions. This sparked my strong interest in writing a new article that tries to summarize recent progress in MTL and to explore possible future directions for MTL research.

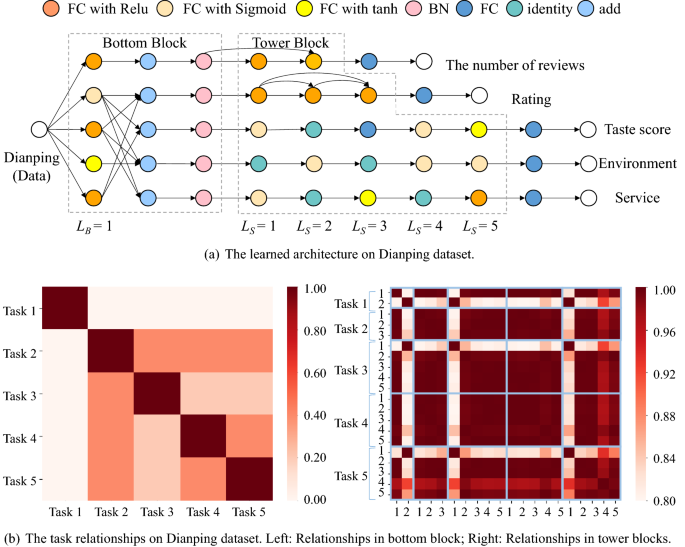

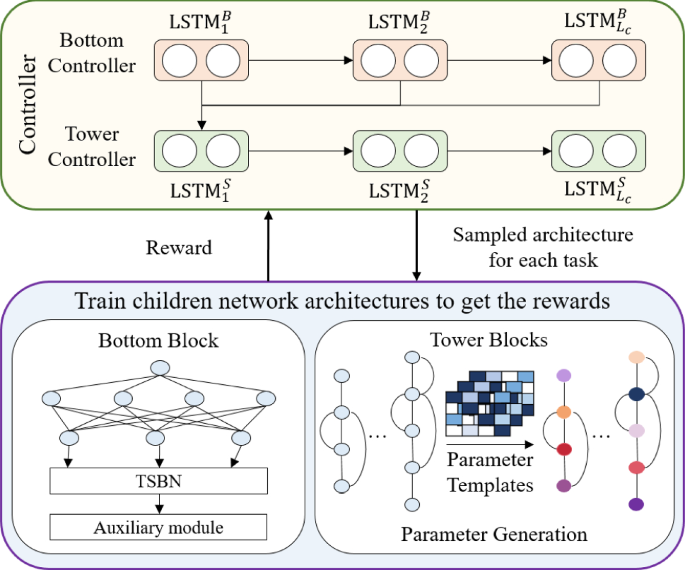

Deep Multi Task Learning With Flexible And Compact Architecture Search Springerlink

Figure 6: Change in pixel accuracy for semantic segmentation classes of AdaShare over MTAN (blue bars). The classes are ordered by the number of pixel labels (the black line). Compared to MTAN, we improve the performance of most classes, including those with less labeled data. (From "AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning".)

This is known as multi-task learning (MTL). In this article, we discuss the motivation for MTL as well as some use cases, difficulties, and recent algorithmic advances. Motivation for MTL: There are various reasons that warrant the use of MTL. We know machine learning models generally require a large volume of data for training. However, we often end up with many tasks ...

Pdf Adashare Learning What To Share For Efficient Deep Multi Task Learning Semantic Scholar

Adashare Learning What To Share For Efficient Deep Multi Task Learning Deepai

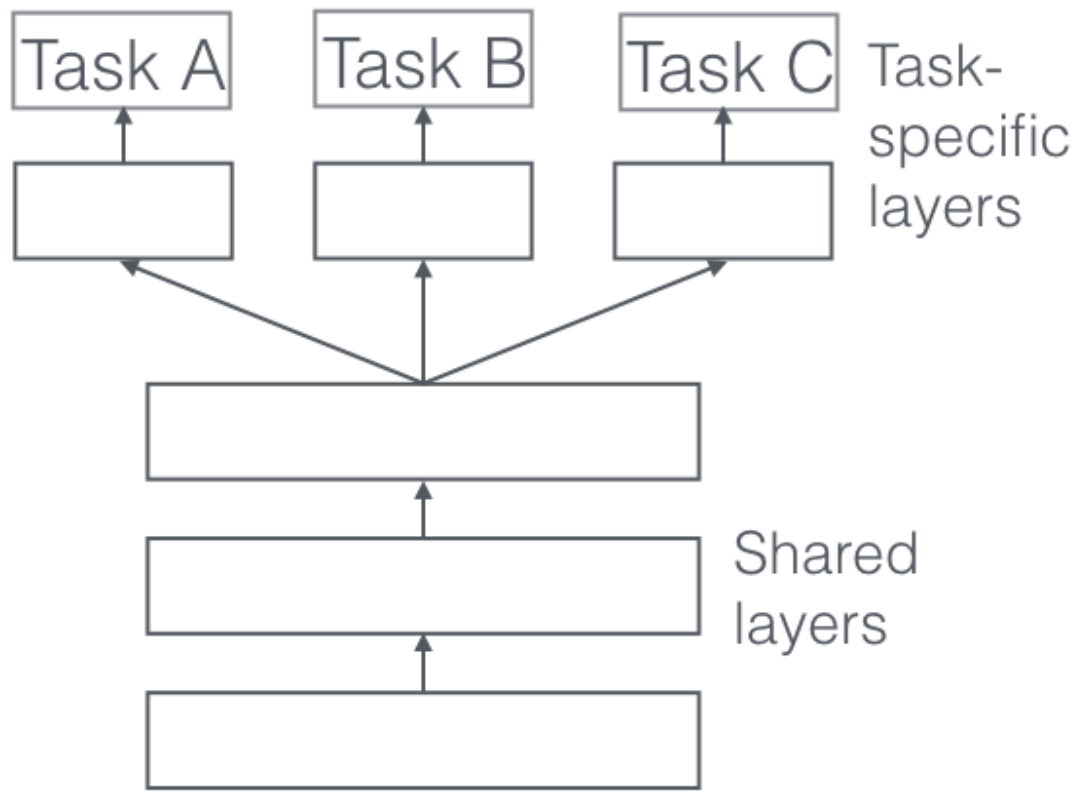

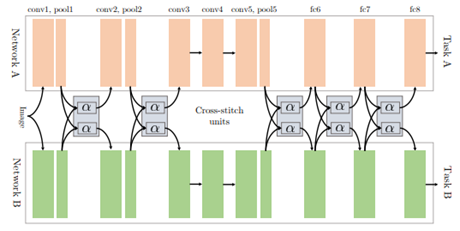

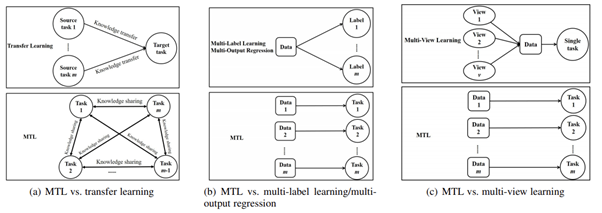

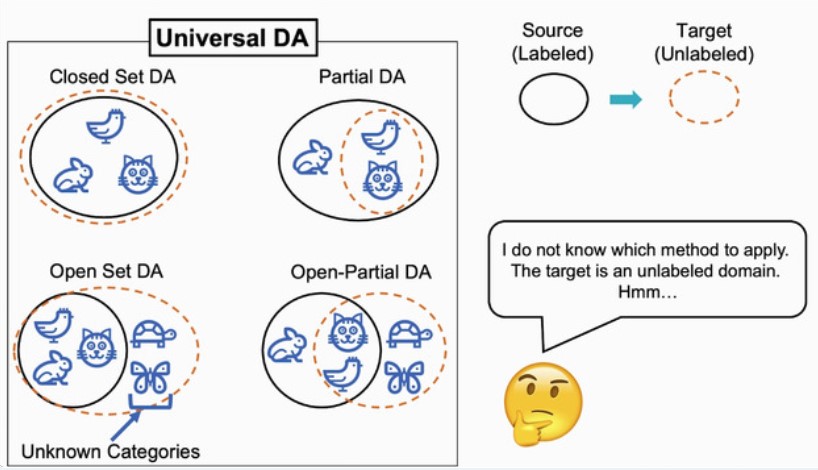

Introduction

Hard-parameter sharing. Advantages: scalable. Disadvantages: pre-assumed tree structures, negative transfer, sensitive to task weights.

Soft-parameter sharing. Advantages: less negative interference (though it still exists), better performance. Disadvantages: not scalable.

Abstract: Multi-task learning is an open and challenging problem in computer vision. The typical way of conducting multi-task learning with deep neural networks is either through handcrafted schemes that share all initial layers and branch out at an ad-hoc point, or through separate task-specific networks with an additional feature sharing/fusion mechanism. Unlike existing methods, we propose an adaptive sharing approach, called AdaShare, that decides what to share ...
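The contrast between hard-parameter sharing (one shared trunk that branches into task heads) and soft-parameter sharing (one network per task plus a feature fusion step) can be sketched in a few lines. This is only an illustration under stated assumptions: features are single floats, the fusion is a plain weighted sum in the spirit of cross-stitch style mixing, and `count_params` ignores the fusion weights themselves. All function names, mixing weights, and parameter counts are hypothetical.

```python
from typing import Callable, Dict

Fn = Callable[[float], float]


def hard_sharing(x: float, trunk: Fn, heads: Dict[str, Fn]) -> Dict[str, float]:
    """One shared trunk, then a separate head per task."""
    h = trunk(x)
    return {task: head(h) for task, head in heads.items()}


def soft_sharing(
    x: float,
    trunks: Dict[str, Fn],
    heads: Dict[str, Fn],
    mix: Dict[str, Dict[str, float]],
) -> Dict[str, float]:
    """One trunk per task; each task fuses all trunks' features before its head."""
    feats = {task: trunk(x) for task, trunk in trunks.items()}
    out = {}
    for task, head in heads.items():
        # Assumed fusion: weighted sum of every task's features.
        fused = sum(mix[task][src] * feats[src] for src in feats)
        out[task] = head(fused)
    return out


def count_params(trunk_params: int, head_params: int, num_tasks: int, soft: bool) -> int:
    """Rough parameter count; fusion weights are ignored for simplicity."""
    num_trunks = num_tasks if soft else 1
    return num_trunks * trunk_params + num_tasks * head_params


heads = {"seg": lambda h: h + 1.0, "depth": lambda h: 3.0 * h}
hard_out = hard_sharing(1.0, lambda x: 2.0 * x, heads)

trunks = {"seg": lambda x: 2.0 * x, "depth": lambda x: x + 3.0}
mix = {"seg": {"seg": 0.75, "depth": 0.25}, "depth": {"seg": 0.5, "depth": 0.5}}
soft_out = soft_sharing(1.0, trunks, heads, mix)

hard_size = count_params(1000, 10, 3, soft=False)
soft_size = count_params(1000, 10, 3, soft=True)
```

The parameter counts show why the slide calls hard sharing scalable and soft sharing not scalable: with a shared trunk the cost of adding a task is one extra head, while with per-task trunks it is a whole extra network.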

Openaccess Thecvf Com Content Cvpr21w Ntire Papers Jiang Png Micro Structured Prune And Grow Networks For Flexible Image Restoration Cvprw 21 Paper Pdf

Pdf Dselect K Differentiable Selection In The Mixture Of Experts With Applications To Multi Task Learning

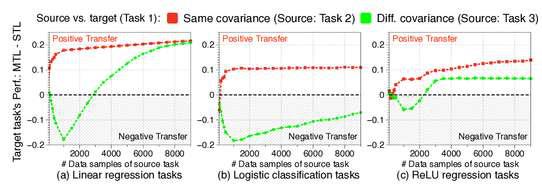

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. In Hugo Larochelle, Marc'Aurelio Ranzato, Raia Hadsell, Maria-Florina Balcan, Hsuan-Tien Lin, editors, Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems, NeurIPS, December 6-12, virtual.

Multi-Task Learning: Theory, Algorithms, and Applications. (Lawrence and Platt, ICML 04): an efficient method is proposed to learn the parameters (of a shared covariance function) for the Gaussian process. It adopts the multi-task informative vector machine (IVM) to greedily select the most informative examples from the separate tasks and hence alleviate the computation cost.

Research Google Pubs Pub Pdf

Code: sunxm2357/AdaShare

Kate Saenko Proud Of My Wonderful Students 5 Neurips Papers Come Check Them Out Today Tomorrow At T Co W5dzodqbtx Details Below Buair2 Bostonuresearch

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning (Supplementary Material). Ximeng Sun, Rameswar Panda, Rogerio Feris, Kate Saenko.

Note that the posts below were written by the same author as the posts published at https://kdst.re.kr.

Papertalk The Platform For Scientific Paper Presentations

Multi-Task Learning Study Notes: A Record Of The Literature Read While Learning MTL, By Yanwei Liu, Medium

Adashare Learning What To Share For Efficient Deep Multi Task Learning Arxiv Vanity

DL Reading Group: AdaShare Learning What To Share For Efficient Deep Multi Task

Multi Task Learning And Beyond: Past, Present And Future (Zhihu)

Http Proceedings Mlr Press V119 Guoe Guoe Pdf

Arxiv Org Pdf 09

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning, by Ximeng Sun, Rameswar Panda, Rogerio Feris and Kate Saenko.

Bibliographic information. Title: AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning (https://arxiv.org/abs/). Authors: Ximeng Sun, Rameswar Panda, Rogerio Feris (Boston University, IBM Research & MIT-IBM Watson AI Lab).

• Proposes a new approach for efficient multi-task learning.
• In a multi-task network, for a given task ...

Openreview Net Pdf Id Howqizwd 42

Accelerating Deep Learning Workloads through Efficient Multi-Model Execution. Deepak Narayanan, Keshav Santhanam, Amar Phanishayee†, Matei Zaharia. Stanford University, †Microsoft Research. Abstract: Deep neural networks (DNNs) with millions of parameters are increasingly being applied across a variety of domains. GPUs, the compute platform of choice for DNNs, have ...

Unlike existing methods, we propose an adaptive sharing approach, called AdaShare, that decides what to share across which tasks.

Papertalk

Auto Virtualnet Cost Adaptive Dynamic Architecture Search For Multi Task Learning Sciencedirect

Intuition behind Multi-Task Learning (MTL): By using deep learning models, we usually aim to learn a good representation of the features or attributes of the input data to predict a specific value. Formally, we aim to optimize for a particular function by training a model and fine-tuning the hyperparameters until the performance can't be increased further. By using MTL, it ...

Project Page: https://cs-people.bu.edu/sunxm/AdaShare/project.html

Learning What To Share For Efficient Deep Multi Task Learning

AdaShare: Efficient Deep Multi-Task Learning (Zhihu)

Multimodal Learning Archives Mit Ibm Watson Ai Lab

Openreview Net Pdf Id Tpfhiknddkx

Adashare Learning What To Share For Efficient Deep Multi Task Learning Pythonrepo

Cs People Bu Edu Sunxm Adashare Neurips Slides Pdf

Arxiv Org Pdf 1905

Multi Task Learning In Computer Vision Must Reading Aminer

Kate Saenko On Slideslive

Learning To Branch For Multi Task Learning Deepai

Dl Acm Org Doi Pdf 10 1145

Learning What To Share Between Loosely Related Tasks Arxiv Vanity

Adashare Learning What To Share For Efficient Deep Multi Task Learning Issue 1517 Arxivtimes Arxivtimes Github

Computer Vision Archives Mit Ibm Watson Ai Lab

Adashare Learning What To Share For Efficient Deep Multi Task Learning Request Pdf

Http Www2 Agroparistech Fr Ufr Info Membres Cornuejols Teaching Master Aic Projets M2 Aic Projets 21 Learning To Branch For Multi Task Learning Pdf

Kdst Adashare Learning What To Share For Efficient Deep Multi Task Learning Nips Paper Review

Openreview Net Pdf Id Pauvowaxtar

Cs People Bu Edu Sunxm Adashare Adashare Poster Pdf

Adashare Learning What To Share For Efficient Deep Multi Task Learning Papers With Code

Paper Review: Adashare Learning What To Share For Efficient Deep Multi Task Learning Nips

Pdf Stochastic Filter Groups For Multi Task Cnns Learning Specialist And Generalist Convolution Kernels

Home Rogerio Feris